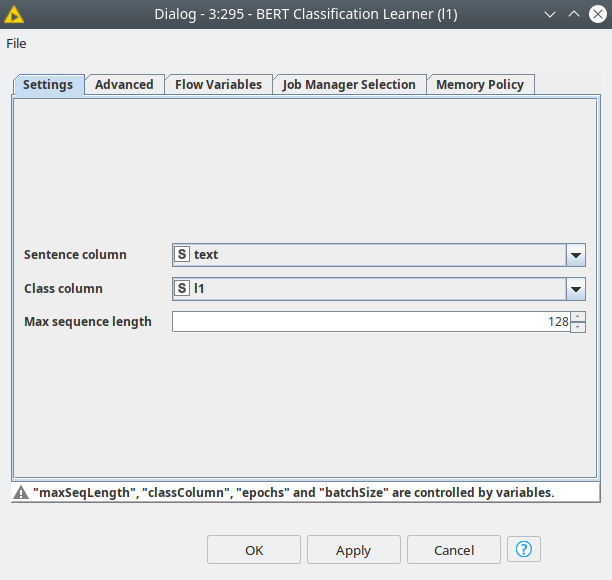

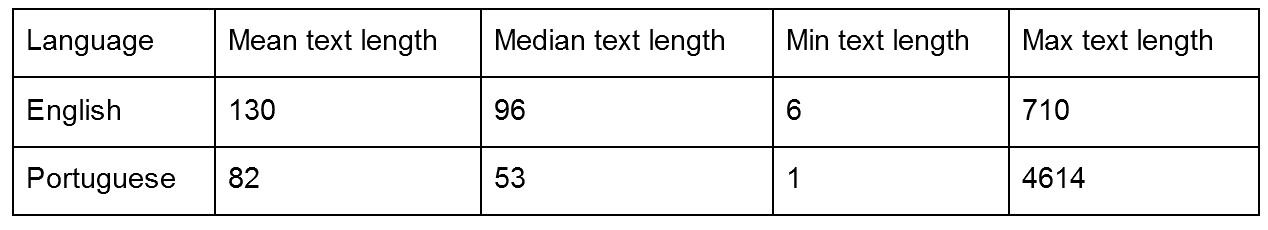

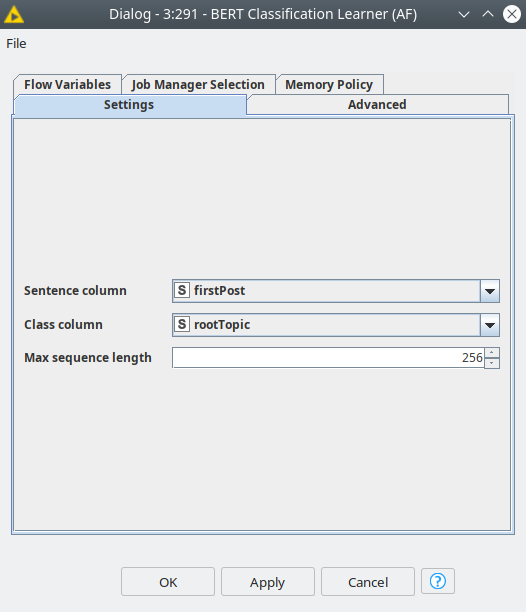

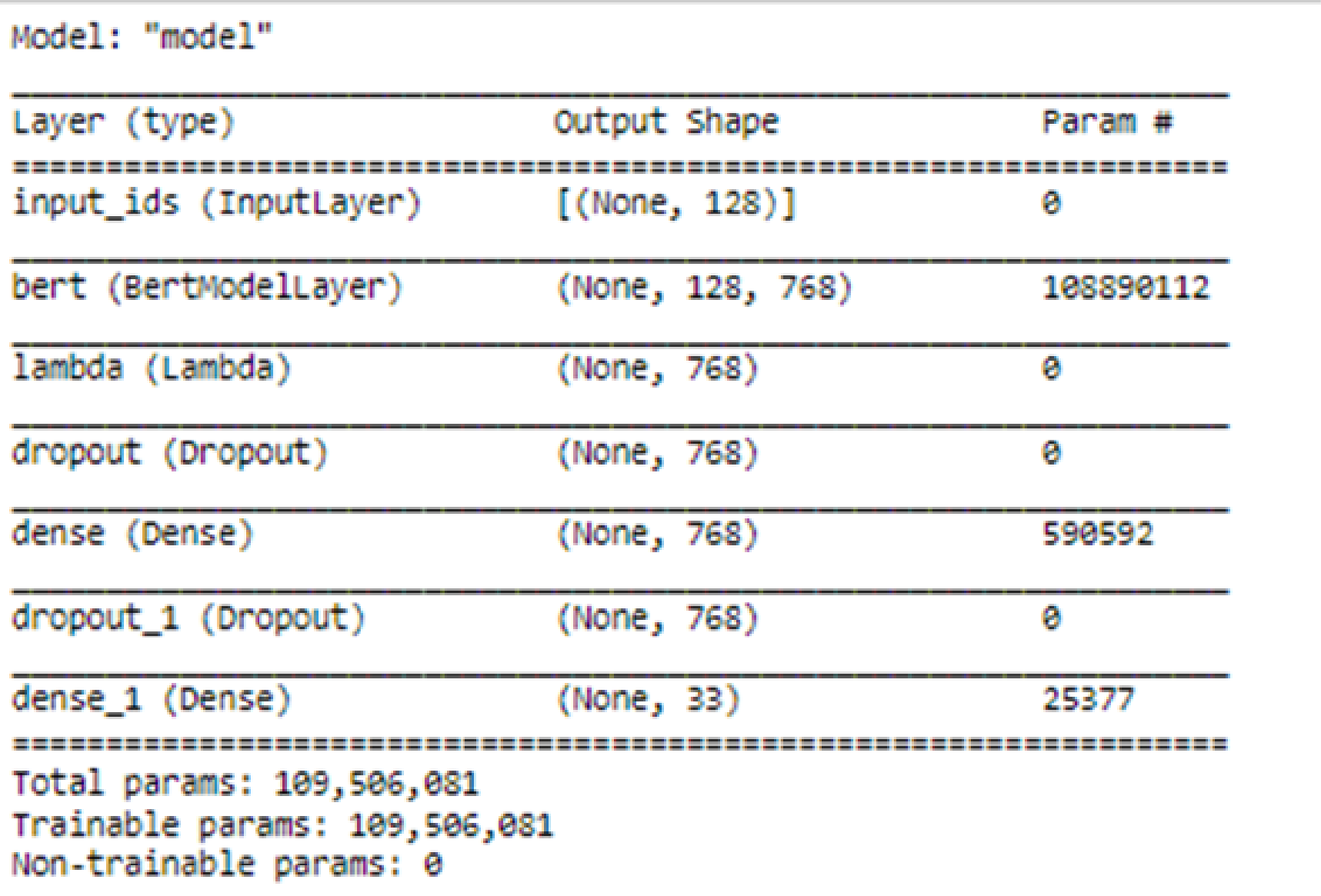

Automatic text classification of actionable radiology reports of tinnitus patients using bidirectional encoder representations from transformer (BERT) and in-domain pre-training (IDPT) | BMC Medical Informatics and Decision Making

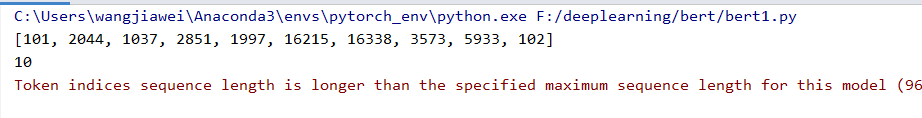

token indices sequence length is longer than the specified maximum sequence length · Issue #1791 · huggingface/transformers · GitHub

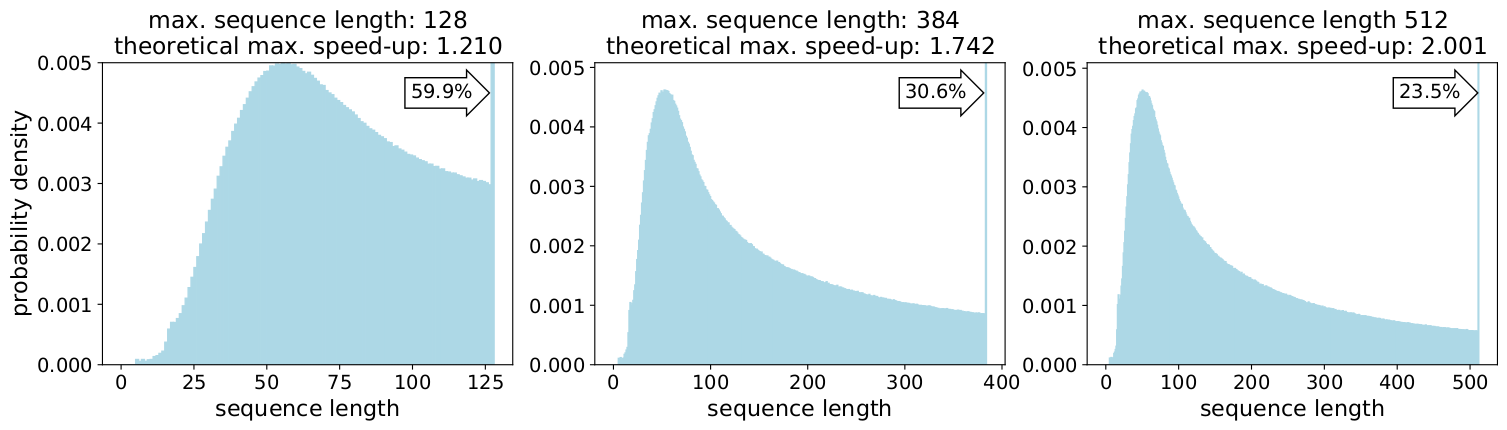

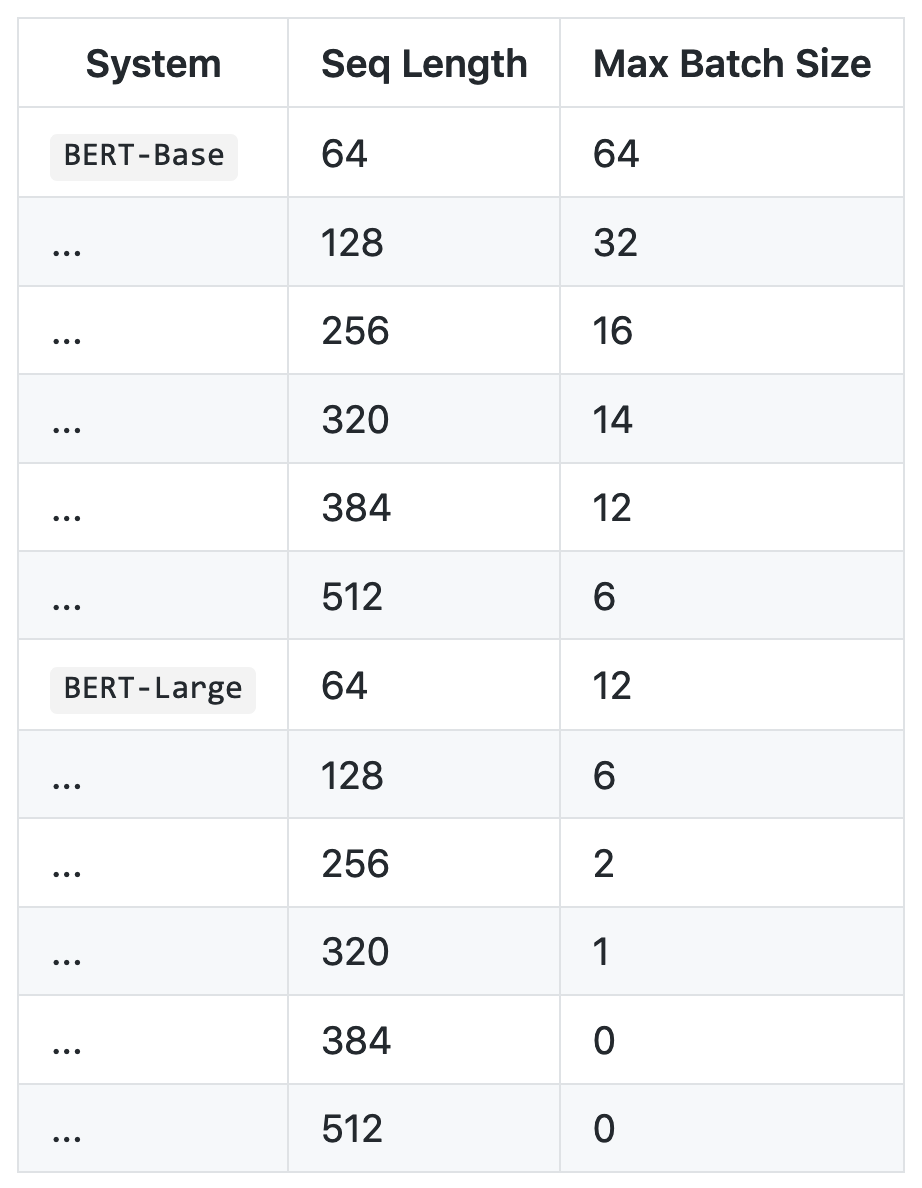

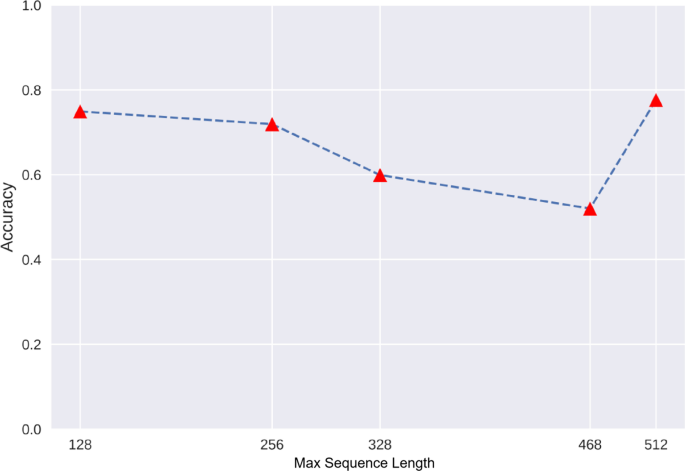

Results of BERT4TC-S with different sequence lengths on AGnews and DBPedia.