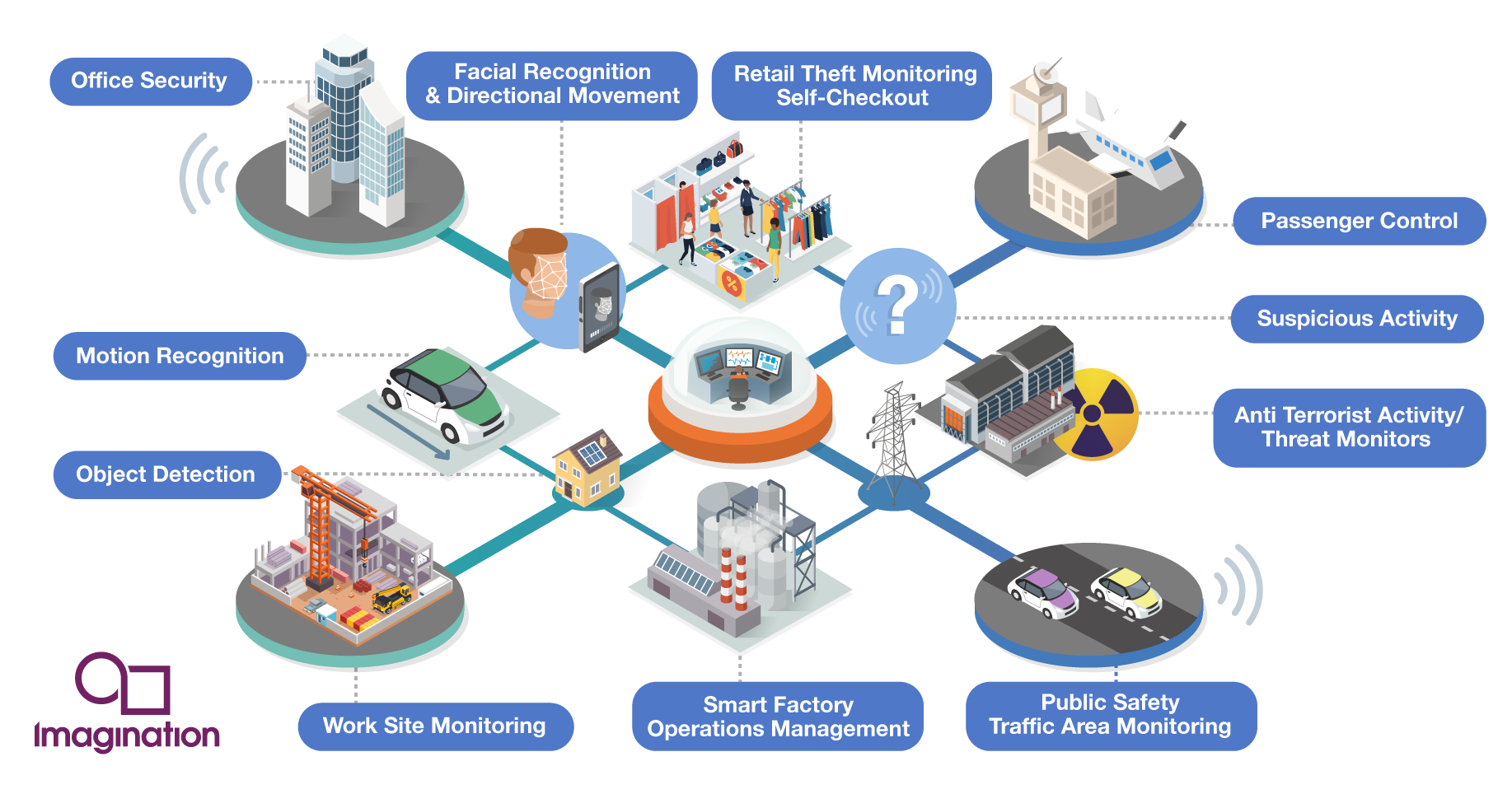

Embedded deep learning creates new possibilities across disparate industries | Vision Systems Design

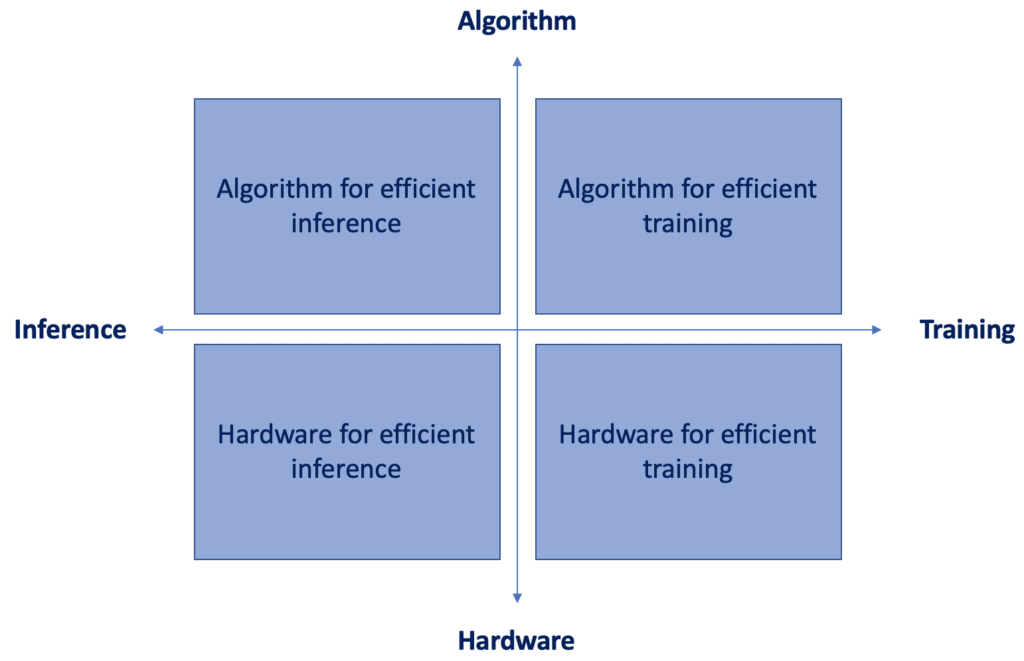

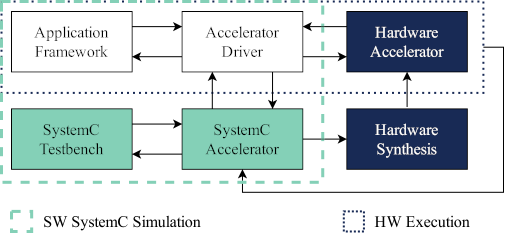

SECDA: Efficient Hardware/Software Co-Design of FPGA-based DNN Accelerators for Edge Inference | Perry Gibson 🍐

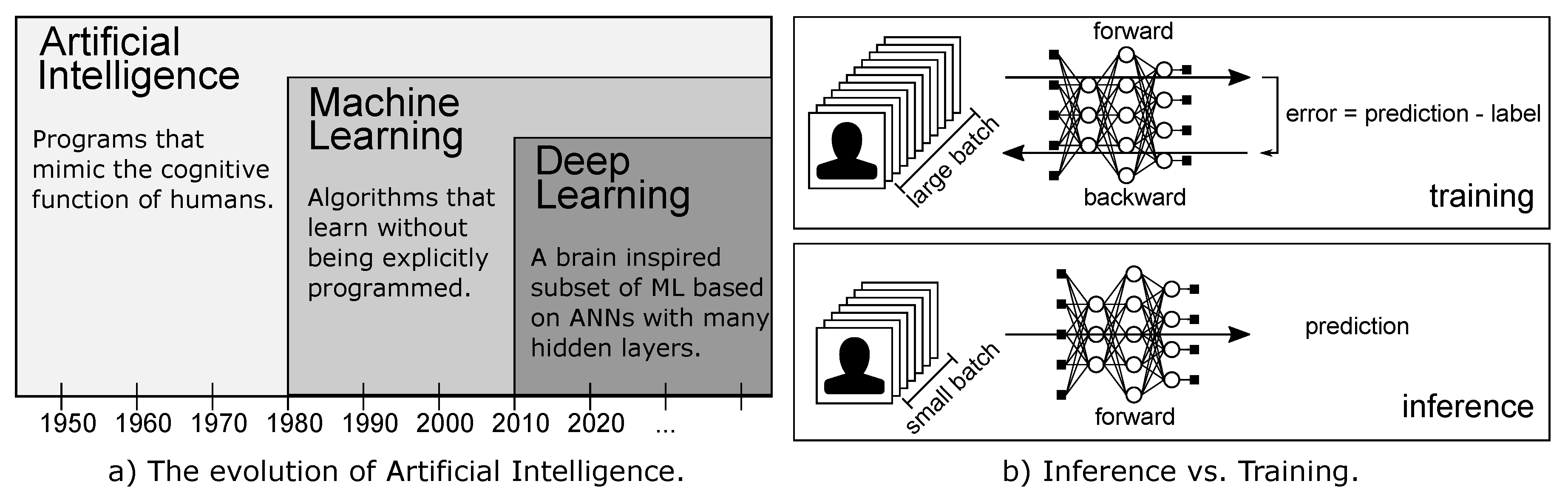

Future Internet | An Updated Survey of Efficient Hardware Architectures for Accelerating Deep Convolutional Neural Networks

Wenqi Jiang, Zhenhao He, Shuai Zhang, Thomas B. Preußer, Kai Zeng, Liang Feng, Jiansong Zhang, Tongxuan Liu, Yong Li, Jingren Zhou, Ce Zhang, Gustavo Alonso · Oral: MicroRec: Efficient Recommendation Inference by

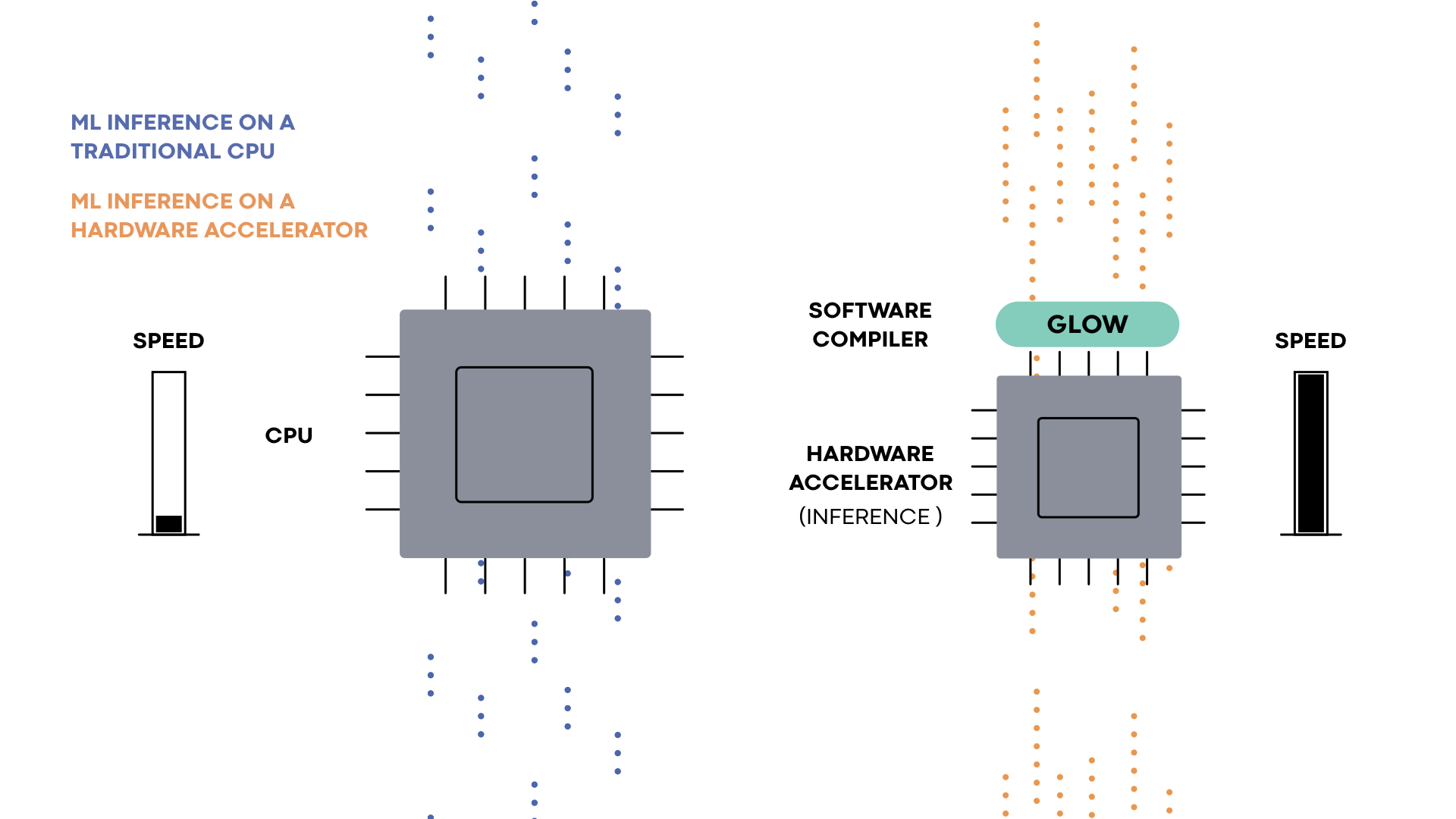

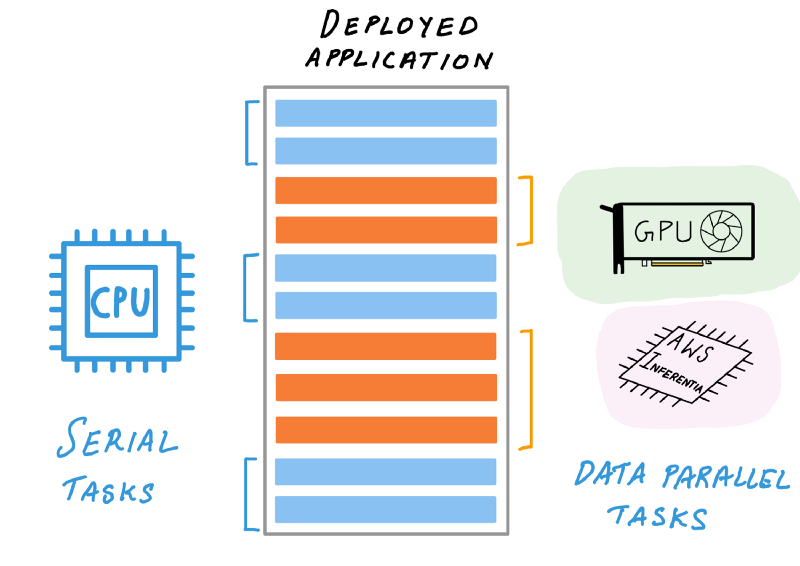

A complete guide to AI accelerators for deep learning inference — GPUs, AWS Inferentia and Amazon Elastic Inference | by Shashank Prasanna | Towards Data Science

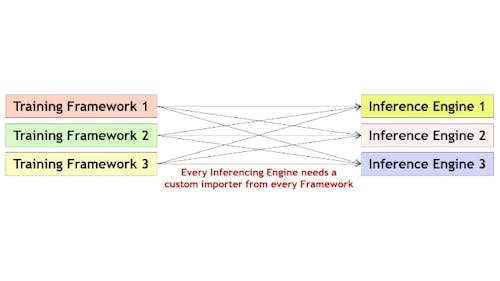

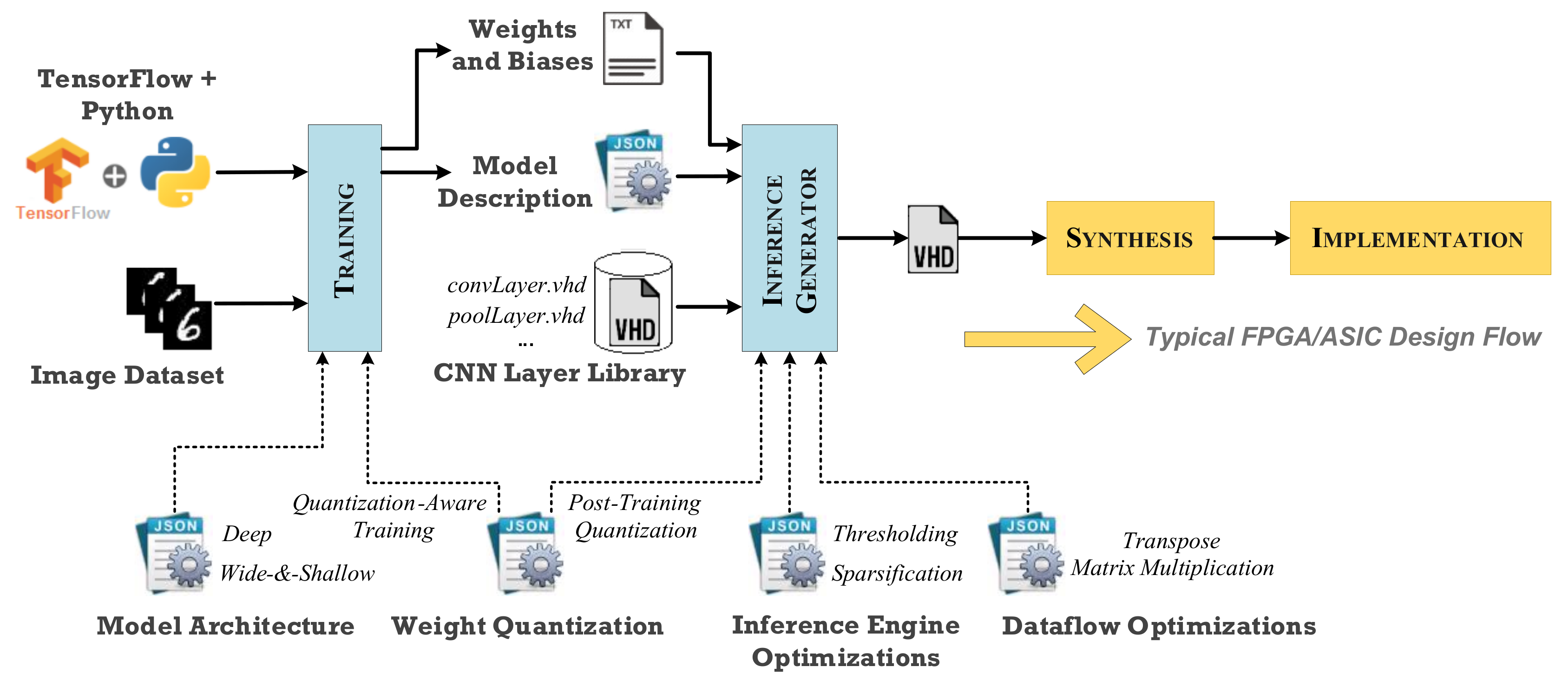

Technologies | A TensorFlow Extension Framework for Optimized Generation of Hardware CNN Inference Engines