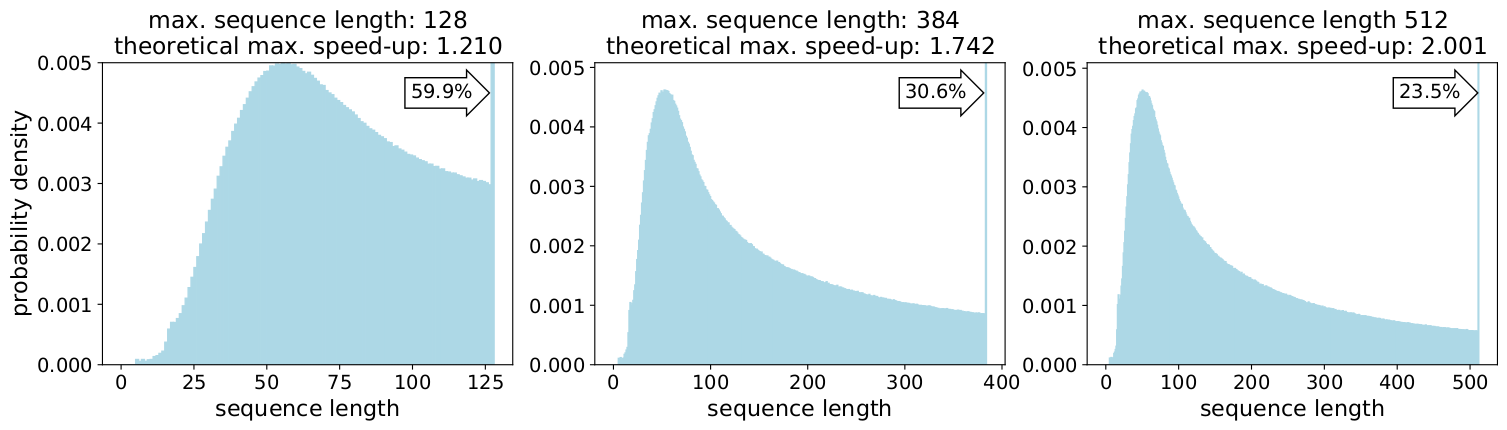

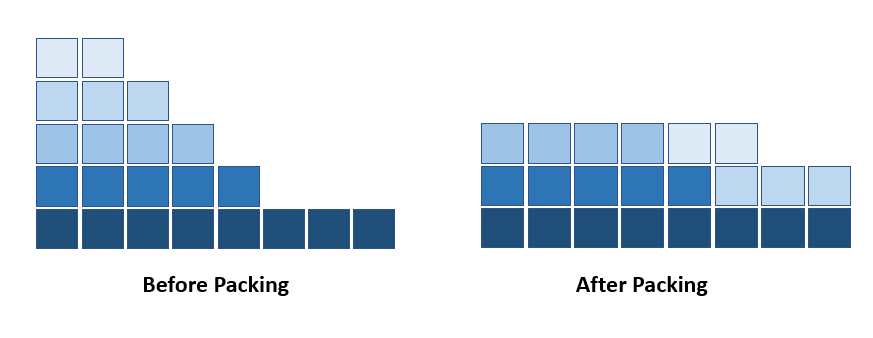

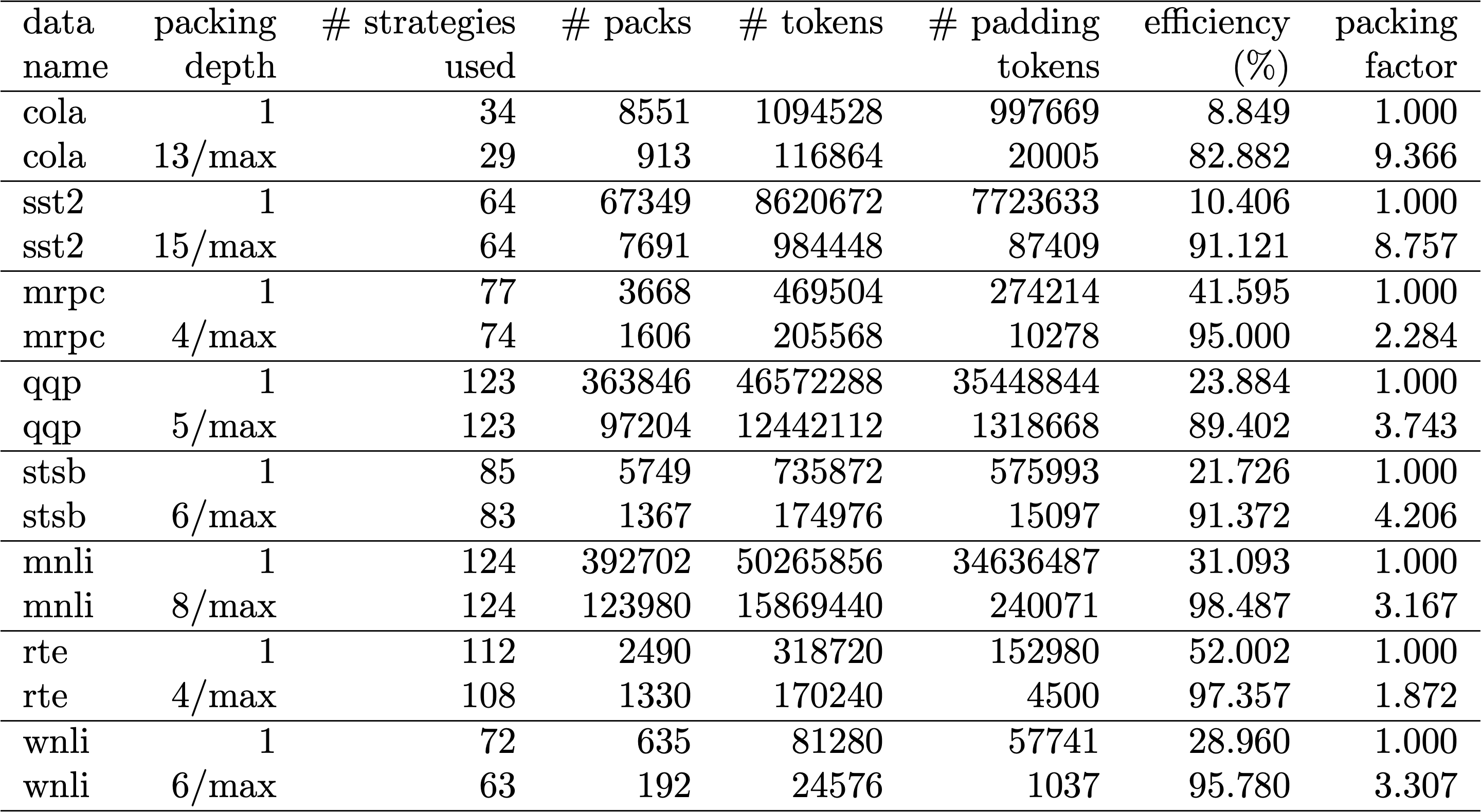

Introducing Packed BERT for 2x Training Speed-up in Natural Language Processing | by Dr. Mario Michael Krell | Towards Data Science

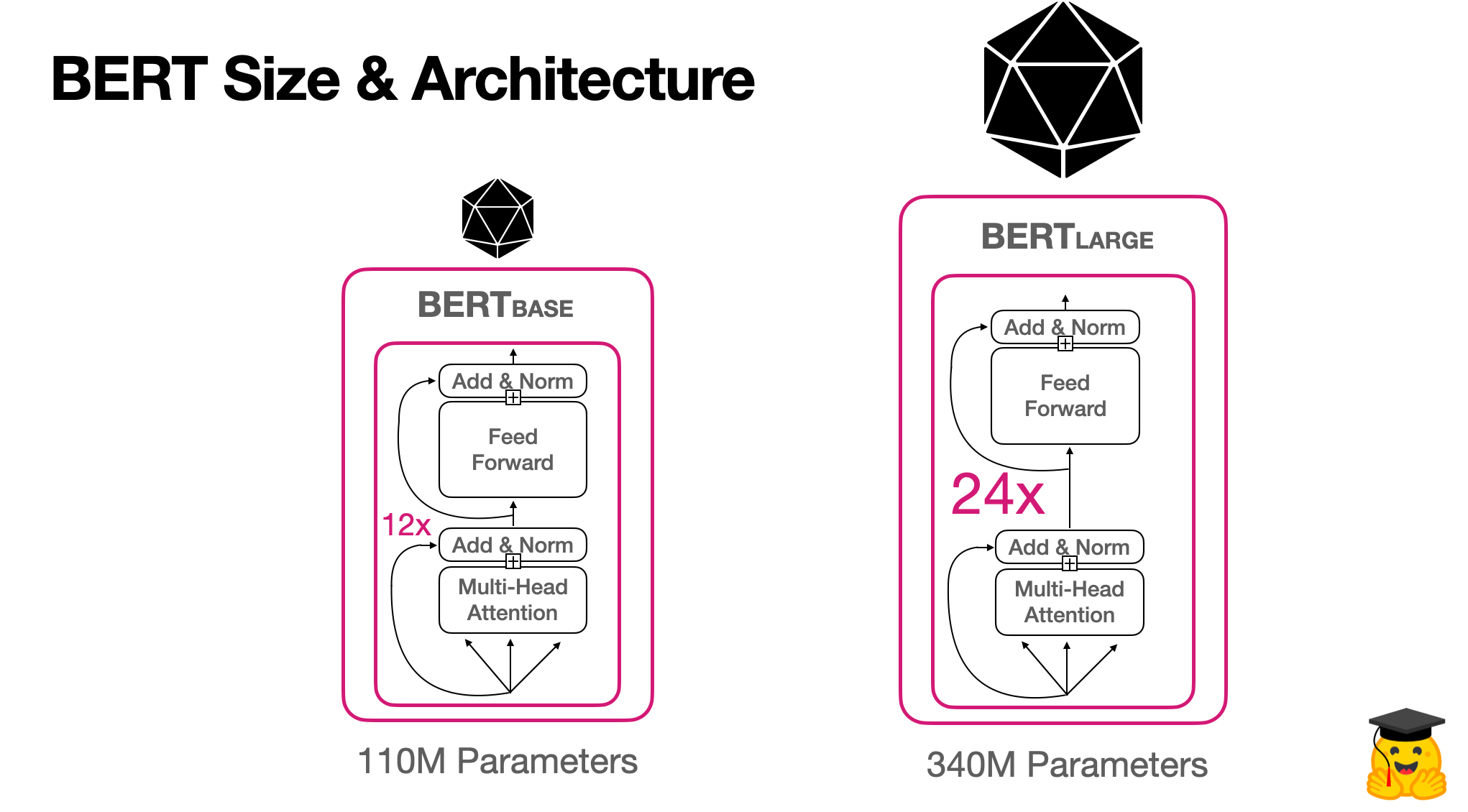

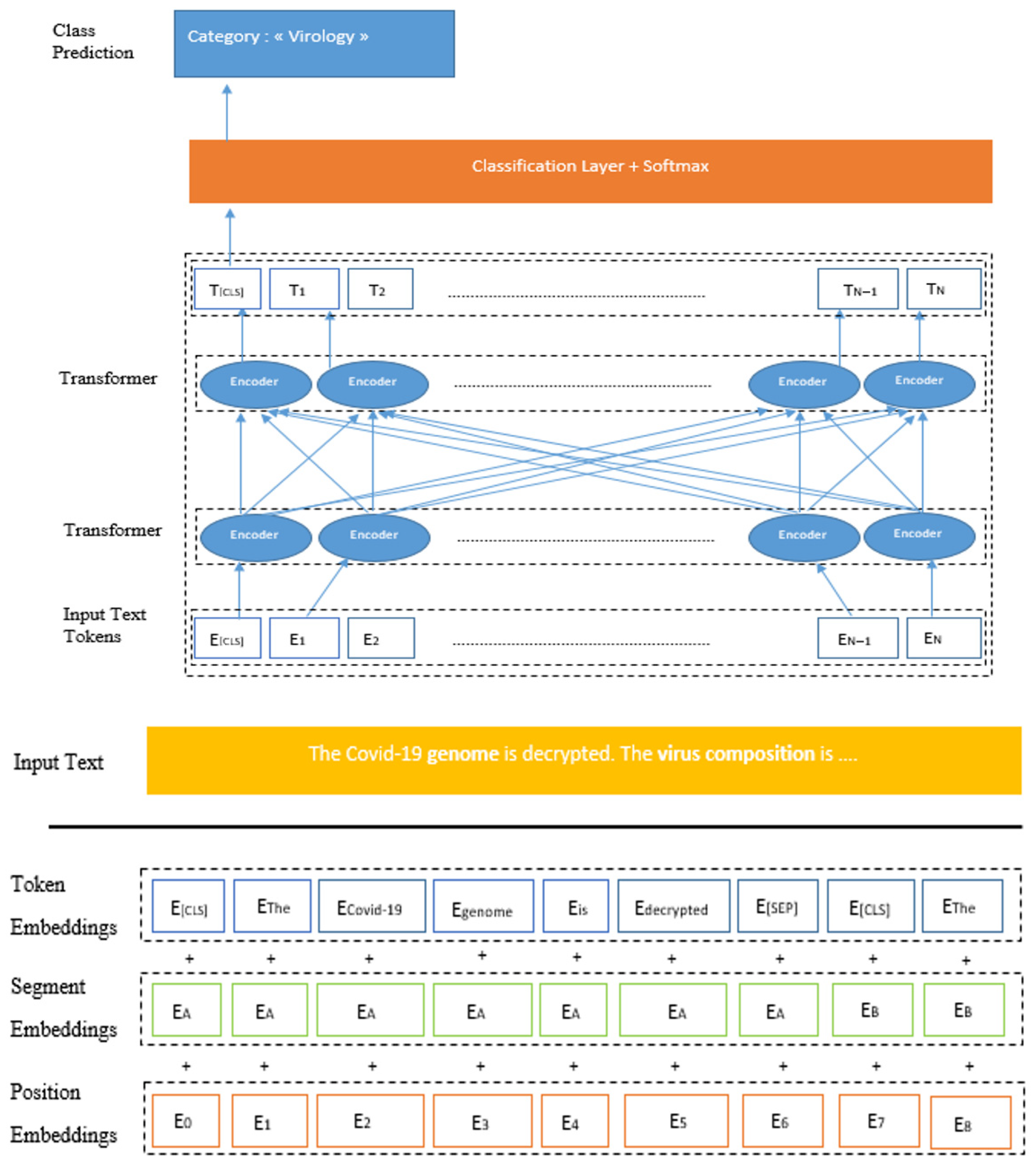

Applied Sciences | Free Full-Text | Survey of BERT-Base Models for Scientific Text Classification: COVID-19 Case Study

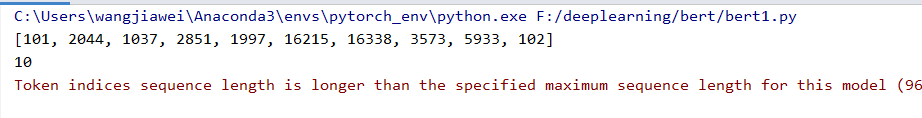

token indices sequence length is longer than the specified maximum sequence length · Issue #1791 · huggingface/transformers · GitHub

deep learning - Why do BERT classification do worse with longer sequence length? - Data Science Stack Exchange

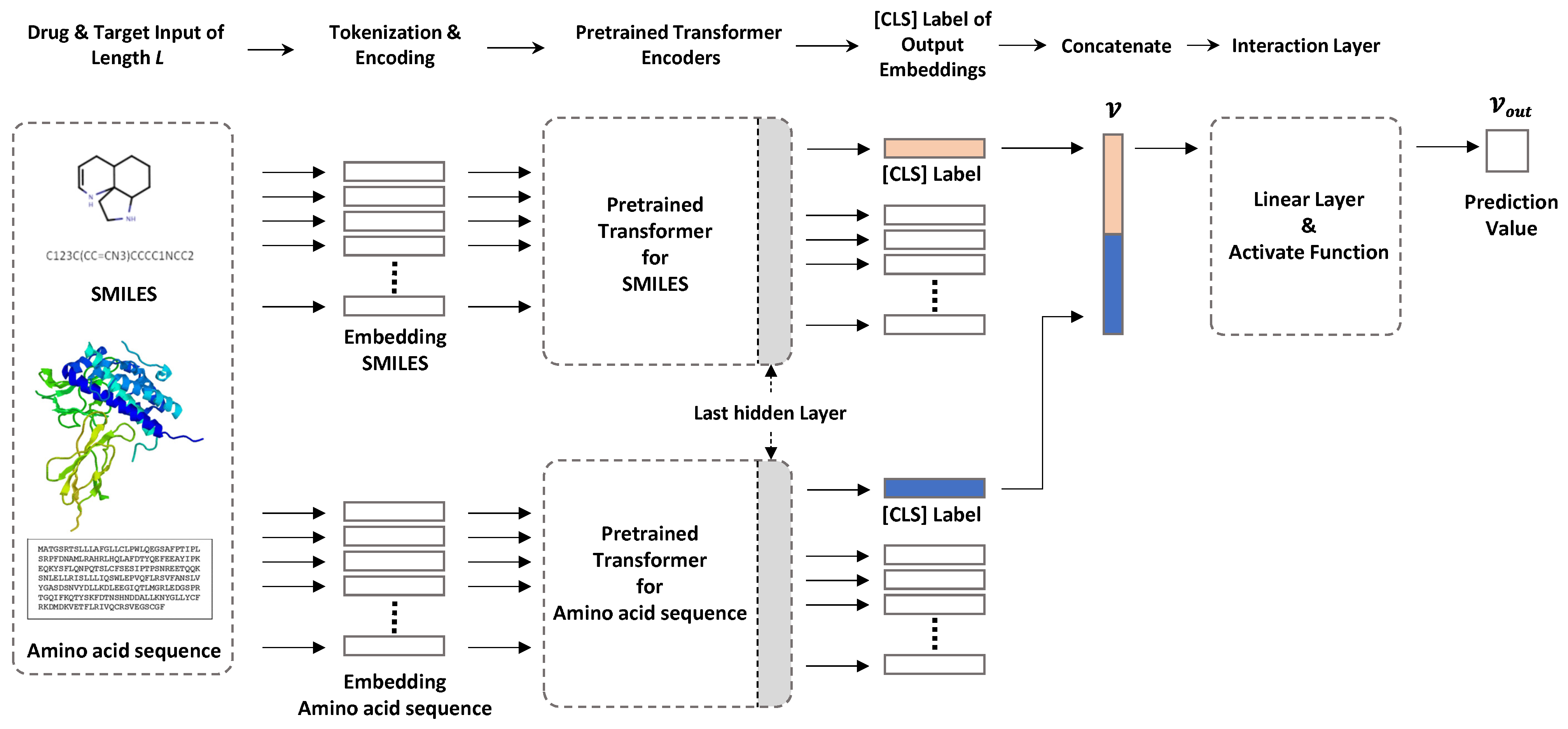

Concept placement using BERT trained by transforming and summarizing biomedical ontology structure - ScienceDirect

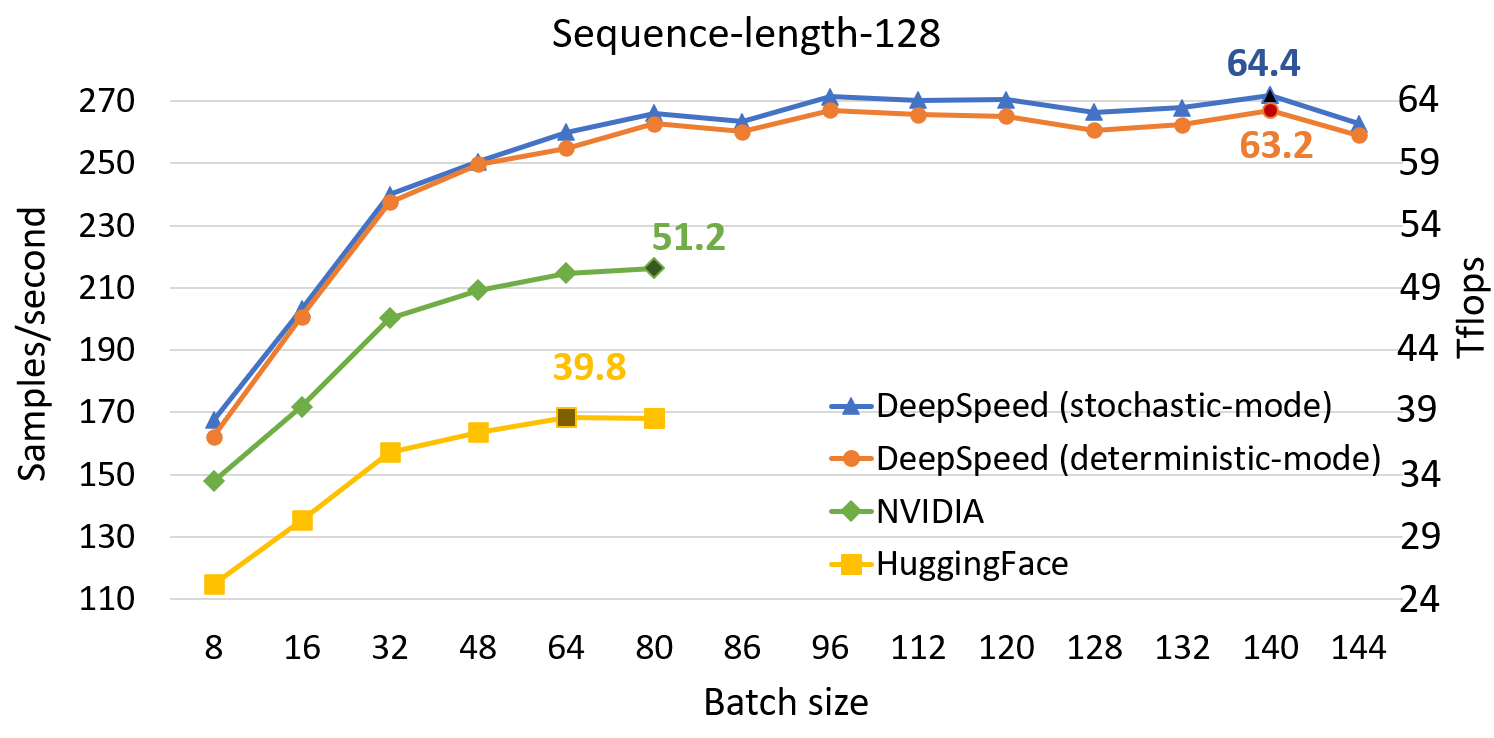

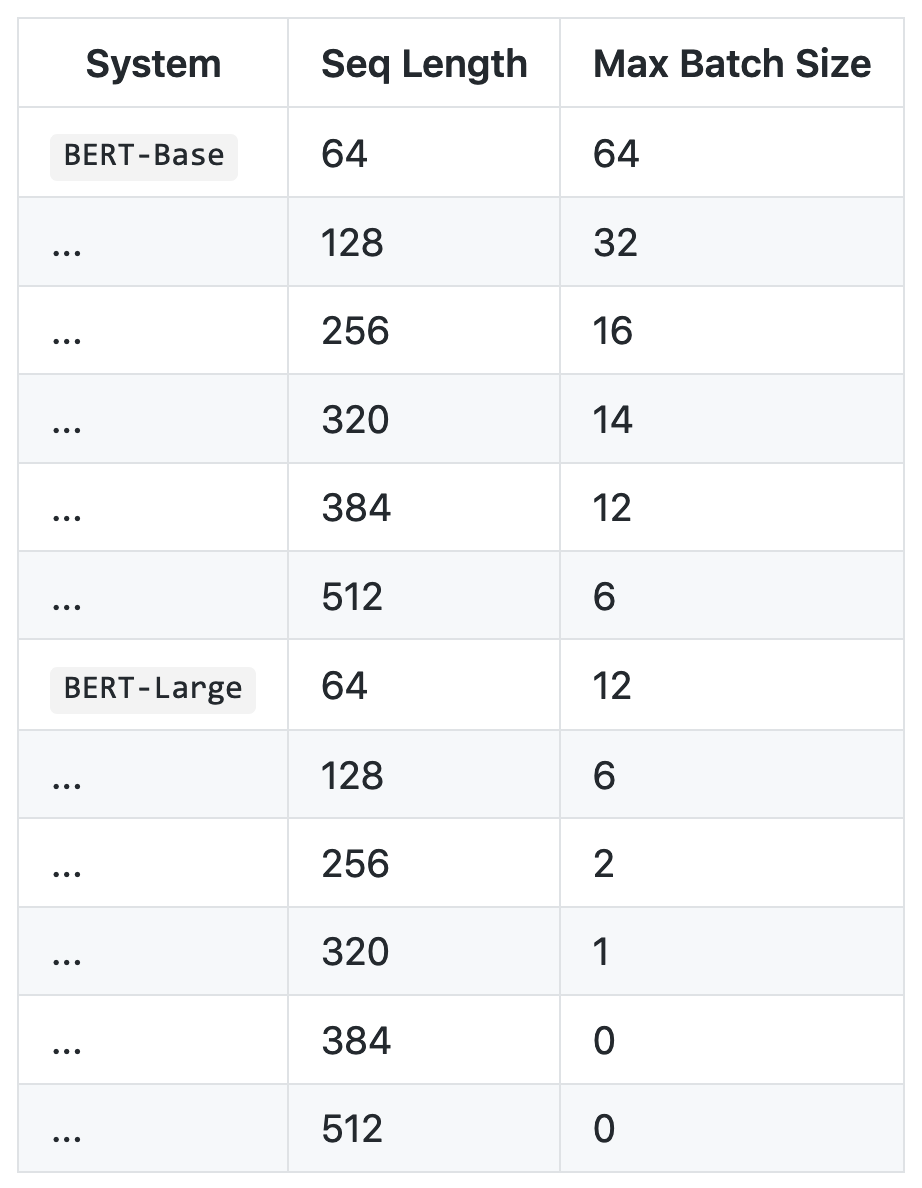

BERT inference on G4 instances using Apache MXNet and GluonNLP: 1 million requests for 20 cents | AWS Machine Learning Blog